scite – a «super power» for researchers?

Uno de los problemas más largos no resueltos del análisis de citas es que, si bien es fácil obtener el número de citas, el contexto de cada cita es difícil de determinar sin leer el documento. ¿Es una mención de su artículo una mera mención? ¿O el documento de referencia tiene resultados que apoyan o incluso contradicen su documento?

Scite intenta resolver este problema a gran escala procesando millones de artículos de texto completo, aplicando modelos de aprendizaje profundo para tratar de clasificar las citas por si están «apoyando», «mencionando» y «contradiciendo».

scite – a «super power» for researchers? Interview with Josh Nicholson, CEO , scite

by Aaron Tay

In 2018, I started noticing an increasing number of startups that focused on applying machine learning in the Libraries and Scholarly Communication arena. There were tools such as Scholarcy, Paper Digest and Get the Research that auto summarizes articles, while companies like UNSILO were applying machine learning to publisher manuscript submission systems like ScholarOne.

Part of the reason for this happening could be simply due to the overall increase in the effectiveness of machine learning algorithms in the past 5 years but equally important is the growing increase in content that is open – both in full text and metadata thanks to the open access and open data movement that provides the “fuel” that makes machine learning possible for startups.

One of the startups that caught my eye recently is scite. One of the long unsolved problems of citation analysis is that while it is easy to obtain citation counts, the context of each citation is difficult to determine without reading the paper. Is a citation to your paper a mere mention? Or does the citing paper have results that support or even contradict your paper?

scite attempts to solve this problem at scale by processing millions of full text articles, applying deep learning models to try to classify citations by whether they are “supporting”, “mentioning” and “contradicting”

I find the idea fascinating and had an opportunity to try out an early closed beta version of scite.

You can try out scite yourself now that it is publicly released.

One of the co-founders and CEO of scite, Josh Nicholson, kindly agreed to answer some of my questions in this interview for readers of this blog.

Q: Could you briefly explain your background and the reason you started working on scite?

I have a PhD in cell biology from Virginia Tech, where I studied the effects of aneuploidy on chromosome mis-segregation in cancer cells (scite report). In addition to my research on cancer, I have always been fascinated by how research itself is performed and would often do some basic analyses looking at funding, peer review, and scientific publishing. Through this research and, perhaps most importantly, through my personal experiences of publishing as a grad student, I became frustrated with how many aspects of science worked and wanted to fix some of the problems that I was seeing. This lead me to launching a publishing platform called The Winnower, which aimed to make publishing more transparent by employing open post-publication peer review. The Winnower later merged with Authorea, a document editor for researchers and, ultimately, was acquired by Atypon last year. The idea behind scite was actually conceived during the time I was running the Winnower, and the initial concept was published there nearly five years ago as a response to the increasing awareness of the reproducibility crisis. In short, my co-founder Yuri Lazebnik and I would know within our narrow fields of research which reports had been supported or contradicted by subsequent studies. However, people outside of our field would not be able to find these reports because they would often be buried amongst an avalanche of citations and, conversely, if we went outside of our fields, we would not know which studies had been supported or contradicted for the same reason. We wanted to surface research that actually tested claims and to measure it so that reliable research could be assessed better and encouraged.

The idea and the platform have come a long way since then and we’re excited to actually get real feedback from users now that it’s open!

Q: I understand scite is currently in beta and basically covers life science articles. What sources does scite draw content from? Can we expect coverage to improve in the future?

scite requires full-text documents in order to extract and analyze citation statements. We use content from a variety of sources, including academic publishers, PubMed Central, university repositories, and authors. We have ingested 5.5M articles so far and have millions more to process, so we expect coverage to improve quite a lot over the coming weeks, especially in disciplines outside of the life sciences.

Q: As we know machine learning is never 100% reliable and scite is still quite new, what are the accuracy rates (eg recall, precision) can we expect for the classifications for “supporting”, “mentioning” and “contradicting” in scite? Are there any benchmarks you are hoping to hit in the future?

We are writing a preprint now, in which we will share these numbers as well as more technical details about scite! Thus, I would prefer to wait to share that as I think the context behind these numbers are important. What I can say is that we are close to human level accuracy for the three classifications with contradicting being the hardest and mentioning the easiest.

Q: In your view what are some ways researchers, administrators, funders, publishers or librarians etc can use scite to help them in their work?

I think scite unpacks a tremendous amount of information that is contained within citations into an easily digestible format. This allows researchers, funders, publishers, and librarians to make better informed decisions, which can save time and money.

In more practical terms, I have called scite a “super power” for researchers, because it allows researchers to uncover studies they might otherwise overlook, can help them ease information overload and improve reproducibility. It can also help them with writing their own articles as they can use scite before they cite to make sure they are not citing un-reliable (or retracted) studies. Finally, researchers can use scite to show colleagues and funders that their work has been independently supported, something we are already seeing researchers do and something we hope to facilitate in the future by introducing the option of creating individual profiles.

For funding organizations and for the reviewers of grants and papers, scite can help identify researchers that have reliable track records–has this researcher consistently been supported or contradicted in the past? That information, while not perfect, is better than just looking at journal names and citation counts alone and could really change the discussion to looking at the reliability, not just the impact, of research.

For publishers, we think we can help them help their readers by providing a badge that shows if work they are publishing has been supported or contradicted. Similarly by integrating with scite, they can help their authors show how reliable their work is.

For librarians, scite is a powerful learning and discovery tool that can be shared with researchers at their institutions. Thus, scite is something they can provide researchers to help with research. scite can also help librarians track the publications of researchers at their institutions.

Q: What are the main priorities for improving scite? Are there any upcoming features you can share that we can look forward to?

Our main priorities are increasing the coverage of scite by ingesting more papers, and improving the accuracy of our classifier. I am happy to say that we are making great progress on both of these fronts. We will be improving search to make it more powerful and flexible. Finally, and I am probably the most excited about this upcoming feature, we will be releasing a plugin that will help researchers use the information provided by scite, anywhere they are reading a scientific paper online.

Q: Where does the funding for scite come from? Though it might be still early days, do you have any preliminary thoughts on the business model that scite will operate on?

Funding for scite comes from private investors. We hope that scite will provide enough value to its users to make the company self-sustainable. Our aim is to build a sustainable, growing business that continues to make science more reliable by helping scientists and anyone who funds, directs, or studies science, as well as to the public interested in science. While we’re not hiring yet, we hope to be soon and would encourage anyone with interest in this space to reach out!

Q: How do you see scite evolving in the next 3 years?

I think science is one of humankind’s most important endeavors. We want to help make science better by making it more reliable. Over the next three years we hope that scite will become as integral to scientists and science as reading scientific papers is now by developing a system that provides researchers what they need to evaluate research

Q: Is there anything librarians can do to help with scite?

Libguides! No, but seriously, I think librarians can help scite out by helping make researchers aware that they can scite use for writing better papers, for more efficient literature review, and for teaching. Our biggest problem right now is that most scientists simply don’t know about us!

Thank you. All the best for scite in the future, Josh.

[Texto tomado de musingsaboutlibrarianship.blogspot.com]

Visit scite>>

Te puede interesar

Programa del X Congreso Nacional de Ciencias Sociales

comecso - Feb 23, 202623 al 27 de marzo, 2026 | Posgrado de la Facultad de Contaduría y Administración, Universidad Autónoma de Chihuahua, Campus…

Convocatoria Feria del libro

Laura Gutiérrez - Feb 18, 2026FERIA DEL LIBRO X CONGRESO NACIONAL DE CIENCIAS SOCIALES “Las Ciencias Sociales frente a las incertidumbres actuales” INVITACIÓN Información general…

Hoteles con convenio | X Congreso Nacional de Ciencias Sociales

Laura Gutiérrez - Ene 28, 2026X Congreso Nacional de Ciencias Sociales Las Ciencias Sociales frente a las incertidumbres actuales del 23 al 27 de marzo…

Memorias del IX Congreso Nacional de Ciencias Sociales

Roberto Holguín Carrillo - Jul 02, 2025IX Congreso Nacional de Ciencias Sociales Las ciencias sociales y los retos para la democracia mexicana. Realizado en el Instituto…

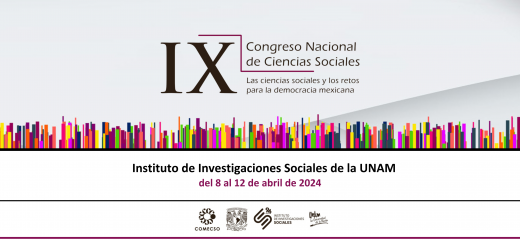

Curso Filosofías y Religiones de Asia

Laura Gutiérrez - Feb 20, 2026Universidad Nacional Autónoma de México, Programa Universitario de Estudios sobre Asia, África y Oceanía Curso Filosofías y Religiones de Asia:…

Información turística | X Congreso Nacional de Ciencias Sociales

Laura Gutiérrez - Feb 20, 2026Restaurantes Cafetería de conta PV45+F7, Campus Uach II, 31125 Chihuahua, Chih. Corinto Café C. Colegio San Marco 1828, Misión Universidad…